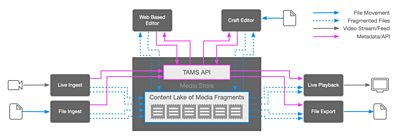

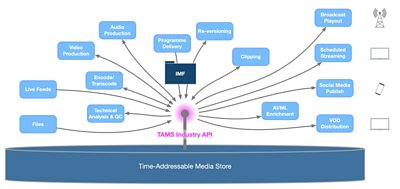

This time last year, BBC Research & Development published the Time-Addressable Media Store (TAMS) API, offering a new way of working with content in the cloud. It’s an open specification that fuses object storage, segmented media and time-based indexing, expressed via a simple HTTP API. It lays the foundations for a multi-vendor ecosystem of tools and algorithms operating concurrently on shared content via a common interface, blending the best of live and file-based working.

Earlier in the project, before publishing the API, we built prototype implementations and ran real-world trials to validate the design. This gave us a high degree of confidence that the TAMS approach would be viable in practice.

Since then, we’ve been busy!

A group of forward-thinking solution architects at Amazon Web Services picked up on the API and were excited about the possibilities. They were particularly interested in the potential of TAMS to streamline fast-turnaround editing in the cloud in an open, modular way, which is one of our top priorities. After further discussion to cement and validate our collective enthusiasm, AWS proposed forming a collaborative project with a number of partners to build an end-to-end proof-of-concept demonstrator of a Cloud-Native Agile Production (CNAP) workflow based on TAMS.

We worked with AWS and their partners to build a shared understanding of the specification and to address feedback on the API. We augmented the spec with application notes that explain how the concepts embodied in the API help to solve real-world problems. The technology vendors among the project participants set to work making their media production tools talk to the TAMS API, and it wasn’t long before some achieved a basic working integration. The media companies in the group (including some of our BBC colleagues) responded positively to the potential of TAMS to resolve some of the conundrums of cloud-based production today.

If you’re at IBC this year, you can see the results on the AWS stand (Hall 5.C90).

This has been an invaluable phase of work, proving how a simple open API can be used to bring interoperability between tools developed by a diverse group of vendors, laying solid foundations for the next wave of development. In the process, the TAMS API has benefited from the refinement that only broader review and practical integration can bring. We’re delighted that the industry’s journey towards realising the full potential of time-addressable media has begun.

What is TAMS?

What exactly do we mean by a 'Time-Addressable Media Store'? What differentiates it from prevailing approaches? And why did we feel it needed to exist?

To answer these questions, let’s take a couple of steps back so we can see more of the landscape. The media industry is in transition. Software is taking over our world. This has been building momentum for some time, enabled by the replacement of AV-specific cables and routers with high-speed data networks, relentless advances in compute capabilities and the new paradigms introduced by cloud computing. BBC R&D has been instrumental in introducing many of these enablers to the world of media, from our role in the birth of SMPTE ST 2110, through our foundational contributions to the JT-NM Reference Architecture and the NMOS family of specifications, to more recent work on Dynamic Media Facilities.

The shift to software certainly promises greater flexibility, but it’s not enough on its own to solve the problems of scalability and interoperability. Simply replacing signal processing with software won’t move us beyond the limitations of workflows originally designed for coax cables and tapes.

The concepts and principles behind the Time-Addressable Media Store aim to provide some of the missing pieces of the puzzle:

TAMS is designed to be cloud-native. Media is stored in short-duration segments in HTTP-accessible object storage. Strong, unique identity is bound to each mono-essence media element (termed a Flow, drawing on the JT-NM Reference Architecture), along with an immutable time-based index. This allows reliable, granular access to a shared repository of media via an HTTP API. Resilience comes from best-practice cloud-native architecture for high availability, rather than the conventional broadcast approach of static redundant systems.
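To make the idea of a Flow with a time-based index more concrete, here is a minimal sketch of a Flow as a unique identity plus a time-ordered list of segment references. The class and field names (`Flow`, `Segment`, `object_id`, `segments_covering`) are hypothetical, chosen for illustration rather than taken from the TAMS specification. Times are held as integer nanoseconds to avoid floating-point drift.

```python
import bisect
import uuid
from dataclasses import dataclass, field

NS_PER_S = 1_000_000_000

@dataclass(frozen=True)
class Segment:
    """A reference to one stored object, placed on the Flow's timeline."""
    start_ns: int   # inclusive start on the timeline
    end_ns: int     # exclusive end on the timeline
    object_id: str  # key of the media object in object storage (hypothetical)

@dataclass
class Flow:
    """A mono-essence media element: a unique identity plus a time index."""
    flow_id: uuid.UUID = field(default_factory=uuid.uuid4)
    segments: list[Segment] = field(default_factory=list)

    def add_segment(self, seg: Segment) -> None:
        # Keep the index sorted by start time and refuse overlapping segments.
        starts = [s.start_ns for s in self.segments]
        i = bisect.bisect_left(starts, seg.start_ns)
        if i > 0 and self.segments[i - 1].end_ns > seg.start_ns:
            raise ValueError("segment overlaps its predecessor")
        if i < len(self.segments) and seg.end_ns > self.segments[i].start_ns:
            raise ValueError("segment overlaps its successor")
        self.segments.insert(i, seg)

    def segments_covering(self, start_ns: int, end_ns: int) -> list[Segment]:
        # Every segment that intersects the half-open range [start_ns, end_ns).
        return [s for s in self.segments if s.start_ns < end_ns and s.end_ns > start_ns]

video = Flow()
for n in range(5):  # five 2-second segments covering 0 s to 10 s
    video.add_segment(Segment(n * 2 * NS_PER_S, (n + 1) * 2 * NS_PER_S, f"obj-{n}"))

hits = video.segments_covering(3 * NS_PER_S, 5 * NS_PER_S)
print([s.object_id for s in hits])  # ['obj-1', 'obj-2']
```

A request for any time range only needs the index, not the media itself; the client then fetches just the objects it was pointed at.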

For maximum flexibility, media elements are stored separately as mono-essence, but they can also be stored in multiplexed form for applications where the need for simplicity overrides the need for flexibility. Synchrony between Flows is expressed through their relationships to a common timeline.
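Because every Flow is indexed against the same timeline, working out where a video Flow and an audio Flow can be presented together reduces to intersecting their time ranges. A minimal sketch, assuming each Flow's coverage is a sorted list of non-overlapping half-open `(start_ns, end_ns)` ranges; the function name `intersect_coverage` is hypothetical.

```python
def intersect_coverage(a, b):
    """Return the ranges where both Flows have media, as sorted (start, end) tuples.

    Both inputs are sorted lists of non-overlapping half-open ranges, in
    nanoseconds on the shared timeline.
    """
    i = j = 0
    out = []
    while i < len(a) and j < len(b):
        start = max(a[i][0], b[j][0])
        end = min(a[i][1], b[j][1])
        if start < end:
            out.append((start, end))
        # Advance whichever range finishes first.
        if a[i][1] <= b[j][1]:
            i += 1
        else:
            j += 1
    return out

S = 1_000_000_000
video = [(0, 10 * S), (12 * S, 20 * S)]  # a gap in the video from 10 s to 12 s
audio = [(2 * S, 15 * S)]
# Both Flows are present from 2 s to 10 s and from 12 s to 15 s.
print(intersect_coverage(video, audio))
```

No media is inspected here: the synchronisation relationship is entirely a property of where each Flow sits on the common timeline.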

These fundamental design decisions have a number of benefits:

Supports multiple formats simultaneously, treating them all equally

All audio-visual media exists on a timeline. In TAMS, the timeline is explicit, and is used to reference the media through its API. At the top level, the media type and format don’t matter. In this world, all media types and formats are equal (audio, video, unconventional or not-yet-invented formats) and can be supported in any combination.
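Since media is referenced purely by position on the timeline, a client can parse and reason about time ranges without knowing anything about the format. The sketch below assumes a string form modelled on the one in the published specification, `[<seconds>:<nanoseconds>_<seconds>:<nanoseconds>)`, where `[`/`(` and `]`/`)` mark inclusive and exclusive ends. `TimeRange` and `parse_timerange` are hypothetical names, and unbounded or negative ranges are deliberately not handled.

```python
import re
from typing import NamedTuple

NS_PER_S = 1_000_000_000

# Assumed syntax: "[<s>:<ns>_<s>:<ns>)". Unbounded ranges are not supported here.
_RANGE = re.compile(r"^([\[(])(\d+):(\d+)_(\d+):(\d+)([\])])$")

class TimeRange(NamedTuple):
    start_ns: int
    end_ns: int
    start_inclusive: bool
    end_inclusive: bool

def parse_timerange(text: str) -> TimeRange:
    """Parse a bounded time range into integer nanoseconds."""
    m = _RANGE.match(text)
    if m is None:
        raise ValueError(f"unsupported timerange: {text!r}")
    open_b, s0, n0, s1, n1, close_b = m.groups()
    return TimeRange(
        start_ns=int(s0) * NS_PER_S + int(n0),
        end_ns=int(s1) * NS_PER_S + int(n1),
        start_inclusive=(open_b == "["),
        end_inclusive=(close_b == "]"),
    )

r = parse_timerange("[10:0_12:500000000)")
print(r.end_ns - r.start_ns)  # 2500000000 ns, i.e. 2.5 s
```

The same parsed range could address a video, audio or data Flow; nothing in it depends on media type.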

Efficient workflows that avoid replication

TAMS facilitates storing media once and using it by reference rather than repeatedly copying media around from place to place as files or streams. It even allows new Flows to be constructed from pre-existing segments. Basic operations like time-shifting, clipping, or simple assembly can be achieved without knowing the media type or format, described purely in terms of timelines.
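Clipping and time-shifting can be expressed as pure metadata operations: the new Flow's index points at the same stored objects, and only their placement on the timeline changes. A minimal sketch over plain `(start_ns, end_ns, object_id)` tuples; `SegmentRef` and `clip_and_shift` are hypothetical, and the `trim_start_ns` field is an illustrative way of recording which part of an object is used when a clip point falls inside a segment.

```python
from typing import NamedTuple

class SegmentRef(NamedTuple):
    start_ns: int       # placement on the new Flow's timeline (inclusive)
    end_ns: int         # placement on the new Flow's timeline (exclusive)
    object_id: str      # unchanged: the stored object is reused, not copied
    trim_start_ns: int  # how far into the object the used portion begins

def clip_and_shift(segments, clip_start, clip_end, new_start):
    """Index [clip_start, clip_end) of the source, re-timed so clip_start lands at new_start.

    No media is read or copied; only references are created.
    """
    shift = new_start - clip_start
    out = []
    for seg_start, seg_end, object_id in segments:
        lo = max(seg_start, clip_start)
        hi = min(seg_end, clip_end)
        if lo >= hi:
            continue  # this segment lies outside the clip
        out.append(SegmentRef(lo + shift, hi + shift, object_id, lo - seg_start))
    return out

S = 1_000_000_000
source = [(0, 2 * S, "obj-0"), (2 * S, 4 * S, "obj-1"), (4 * S, 6 * S, "obj-2")]
# Take 1 s to 5 s of the source and place it at 100 s on a new timeline.
clip = clip_and_shift(source, 1 * S, 5 * S, 100 * S)
for ref in clip:
    print(ref)
```

Partial segments only work if the consumer can honour the trim; cutting on segment boundaries avoids that, at the cost of coarser edit points.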

Extensible

When media elements are stored separately as mono-essence, new elements can be freely added at any time and used in any number of combinations. Association of mono-essence Flows into virtual multi-essence Flows is supported natively in the TAMS data model.
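Associating mono-essence Flows into a virtual multi-essence Flow needs only a new identity and a list of references; no media moves. A minimal sketch with hypothetical `MonoFlow` and `MultiFlow` types, showing an audio-description track being combined with existing video and audio after the fact.

```python
import uuid
from dataclasses import dataclass, field

@dataclass(frozen=True)
class MonoFlow:
    flow_id: uuid.UUID
    essence: str  # e.g. "video", "audio", "data"

@dataclass(frozen=True)
class MultiFlow:
    """A virtual multi-essence Flow: its own identity plus references to components."""
    components: tuple[uuid.UUID, ...]
    flow_id: uuid.UUID = field(default_factory=uuid.uuid4)

    def with_component(self, extra: MonoFlow) -> "MultiFlow":
        # Returns a new grouping; the original is left untouched.
        return MultiFlow(components=self.components + (extra.flow_id,))

video = MonoFlow(uuid.uuid4(), "video")
audio_en = MonoFlow(uuid.uuid4(), "audio")
programme = MultiFlow(components=(video.flow_id, audio_en.flow_id))

# Later, an audio-description track is ingested and combined without re-storing anything.
audio_desc = MonoFlow(uuid.uuid4(), "audio")
accessible = programme.with_component(audio_desc)
print(len(programme.components), len(accessible.components))  # 2 3
```

Creating a new grouping rather than mutating the old one is a design choice made for this sketch, so anything already referring to `programme` is unaffected.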

Supports live and fast-turnaround workflows

TAMS starts from the principle that everything is ingested into the store as early as possible in the workflow, whether it originated as a live stream or a file. Storage of media in short-duration segments reduces the time between writing media to the store and having it available for access. In the case of live stream ingest, media can be made available very close to the live edge. Segment duration can be tuned to achieve the desired balance between latency and cost. This makes TAMS well-suited to fast-turnaround clipping or edit workflows, as we have seen in the Cloud-Native Agile Production project. But once the media is available, it remains available. The scalability of object-based storage in the cloud means that deletion of media from the store is a choice driven by cost rather than a necessity.
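The latency/cost balance can be roughed out with simple arithmetic. Assuming a segment can't be read until it has been completely written, the first sample in a segment waits roughly one segment duration before it is available, while the number of objects (and so write requests) per hour per Flow is inversely proportional to segment duration. The `overhead_s` parameter is a hypothetical allowance for encoding, upload and indexing time; turning object counts into money would also need a storage provider's pricing, which this sketch leaves out.

```python
def segment_tradeoff(segment_s: float, overhead_s: float = 0.0, hours: float = 1.0):
    """Rough live-ingest trade-off for a single Flow.

    Returns (worst_delay_s, objects):
      worst_delay_s -- the first sample in a segment waits for the whole segment
                       to be written, plus any fixed overhead (assumed).
      objects       -- number of segments (stored objects) written over `hours`.
    """
    worst_delay_s = segment_s + overhead_s
    objects = round(hours * 3600 / segment_s)
    return worst_delay_s, objects

for seg in (1.0, 2.0, 6.0, 10.0):
    delay, objects = segment_tradeoff(seg)
    print(f"{seg:g} s segments: ~{delay:g} s behind live at worst, {objects} objects per hour")
```

Halving the segment duration halves the worst-case delay but doubles the number of objects to write, index and later read.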

Asynchronous and parallel working

Unlike signal-centric systems, TAMS access is asynchronous. Once media is ingested into the store, it can be accessed serially or in parallel; sequentially or randomly; close to the live edge or days later. Because the timing relationships between the media are embedded with the media, operations can occur independently on different elements at any time, maintaining the timing information through the process so that synchronisation can be reconstructed when it’s needed. This is a very powerful characteristic: it makes real-time or faster-than-real-time processing and analysis possible, taking full advantage of the scalability of serverless architectures.
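Because each segment carries its own position on the timeline, independent workers can process segments in any order and the results can be put back in sequence afterwards. A minimal sketch using Python's standard `concurrent.futures`; `analyse` is a hypothetical stand-in for real per-segment work (loudness measurement or shot detection, say), and the segments are plain tuples rather than real media.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

def analyse(segment):
    """Hypothetical per-segment job; returns its timeline position with a result."""
    start_ns, end_ns, object_id = segment
    result = f"analysed {object_id}"  # real work would fetch and process the object
    return start_ns, end_ns, result

S = 1_000_000_000
segments = [(n * 2 * S, (n + 1) * 2 * S, f"obj-{n}") for n in range(8)]

results = []
with ThreadPoolExecutor(max_workers=4) as pool:
    futures = [pool.submit(analyse, seg) for seg in segments]
    for fut in as_completed(futures):  # completion order is arbitrary
        results.append(fut.result())

# Timing travels with each result, so the original order can be rebuilt afterwards.
results.sort(key=lambda r: r[0])
print([r[2] for r in results[:3]])
```

A serverless deployment would fan out to separate function invocations rather than threads, but the principle is the same: no worker needs to know about any other.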

Cloud-agnostic deployment

“Cloud-native” doesn’t imply that TAMS is wedded to one cloud provider. The API specification itself has no opinions or dependencies on specific providers’ features or services. A TAM Store could be implemented to be deployed on private/on-premise cloud, or in a self-contained appliance. Essence storage is a well-separated concern, with segments accessed via URLs, so storage solutions can be chosen on a flexible and dynamic basis, independently of the service that implements the index and API endpoints. We’ve verified this model in our own prototype implementations, deployed across a hybrid of AWS and OpenStack cloud with Ceph object storage. If you’re interested in taking a look at an example design that targets AWS infrastructure, their open-source implementation is here.
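Keeping essence storage separate means a client asks the API where a segment lives and then fetches it from whichever URL suits it. A minimal sketch of both halves: the `/flows/{id}/segments?timerange=...` path is an assumption modelled on the published API, and `segments_query_url`, `choose_url` and all hostnames are hypothetical placeholders.

```python
from urllib.parse import quote, urlencode, urlparse

def segments_query_url(base: str, flow_id: str, timerange: str) -> str:
    # Path and query parameter are assumed, not copied from the specification.
    query = urlencode({"timerange": timerange})
    return f"{base.rstrip('/')}/flows/{quote(flow_id)}/segments?{query}"

def choose_url(urls: list[str], preferred_hosts: list[str]) -> str:
    """Pick the first URL served from a preferred storage host, else the first URL.

    Assumes at least one URL. The index service only hands back URLs, so the
    client can apply its own policy, e.g. an on-premise copy before a cloud copy.
    """
    for host in preferred_hosts:
        for url in urls:
            if urlparse(url).hostname == host:
                return url
    return urls[0]

url = segments_query_url(
    "https://tams.example.com/", "9a1b0c2d-0000-4000-8000-000000000001", "[0:0_10:0)"
)
print(url)

copies = ["https://s3.example.com/media/obj-1", "https://ceph.internal.example/media/obj-1"]
print(choose_url(copies, preferred_hosts=["ceph.internal.example"]))
```

Neither function cares which provider serves the bytes, which is what allows storage to be chosen independently of the service that holds the index.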

Versioned packaging and media interchange

Sometimes it’s useful to bundle up all the assets that contribute to a media presentation into a self-contained package, for example, to ship a finished programme between organisations. The TAMS data model shares many of the attributes of formats like Interoperable Master Format (IMF), which is unsurprising since these formats were one of our inspirations. These formats have a complementary role to play, and identity and timing information can be maintained through media delivery and receipt workflows that use them.

Where Next?

The AWS CNAP project has focused on the use of TAMS in fast-turnaround production workflows and has demonstrated its potential in this context. But TAMS really starts to come into its own when it is applied more broadly across the media supply chain, and used as a mechanism for sharing media between traditionally siloed workflows. It offers a future where live and pre-packaged content can be freely mixed in the same environment, with opportunities for converged, more consistent, more efficient workflows that serve both. Supply chain operations can occur in parallel or out-of-order in many cases, with media assembly described purely in metadata that references media elements in the store.

This won’t happen overnight, but TAMS and the principles it embodies are a significant step towards that future. The most critical principle of all is that it is open and extensible, available to all as a common language to promote interoperability and support innovation.

If you’d like to know more, please come and see the CNAP demo at IBC on the AWS stand (5.C90). Come along to the panel session 'Re-inventing Fast-turnaround News and Sports Workflows for the Cloud' at 11:15 on Sunday 15th Sept in the AWS and NVIDIA Innovation Village (14.A13). TAMS will also feature in the EBU Open Source Meetup on Saturday 14th Sept at 16:00 (10.D21). Or you can contact us directly on [email protected].